A recent leak of internal Google API documentation has caused a major stir within the SEO industry and among the broader public. The documents contain valuable information about the inner workings of Google’s search engine, shedding light on methodologies and algorithms that had previously remained inaccessible to outsiders. For SEO professionals, this disclosure provides new opportunities to understand how Google evaluates and ranks websites. For the general public, it confirms long-standing assumptions and speculations about how their data is collected and processed when using the world’s most popular search engine.

The leak surfaced on May 5, 2024, when an anonymous source shared 2,500 pages of API documentation containing 14,014 attributes, claiming the files had been obtained from Google’s internal data repository. The source stated that several former Google employees had verified the leak’s authenticity and had also provided additional confidential details about the search engine’s internal operations.

After this information surfaced, multiple independent experts conducted validation checks to confirm the documents’ legitimacy. Technical specialists and former Google engineers reviewed the materials and concluded that the leak was genuine. One of the analysts examined the documents in depth and confirmed that they contained a significant amount of previously undisclosed information about Google’s internal systems.

The incident is therefore not only authenticated but also widely regarded as the largest leak in Google’s history, offering unprecedented insight into the core mechanisms of the world’s leading search engine.

- What the Google Leak Revealed

- NavBoost and Glue

- Practical Application of NavBoost

- Chrome Data: How Clickstream Information Is Used in Google’s Ranking Algorithms

- How Chrome Click Data Is Applied in Search Algorithms

- The Sandbox for New Websites

- Separate Ranking for Subdomains

- Domain Age

- Domain Authority

- Whitelisting for Specific Query Categories

- Anchor Mismatch

- How Anchor Mismatch Works:

- Quality Raters and the EWOK System

- Title Matching Evaluation

- Exact Match Domains (EMD)

- Additional Key Insights

What the Google Leak Revealed

NavBoost and Glue

The leak highlights NavBoost and its auxiliary system Glue as key components of Google’s ranking algorithms; NavBoost alone is reportedly mentioned 84 times in the documentation. Its primary function is to collect and analyze user behavior data, including:

- Clicks on search results: NavBoost tracks how often users click on each result, allowing Google to determine the popularity and relevance of pages. For example, if users consistently click a specific result for a given query, that page may be promoted to a higher position in the search results.

- Time on page: The system measures how long users stay on a page after clicking. A quick return to the search results can indicate that the page failed to meet user expectations, while longer engagement suggests higher content quality and relevance.

- Subsequent user actions: NavBoost also examines post-click behavior. If users refine their query or continue browsing other results after visiting a page, that interaction data is factored into ranking adjustments.
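To make the three signals concrete, here is a toy aggregation in Python. It is a minimal sketch, not Google’s code: the `ClickEvent` structure, the 30-second `LONG_CLICK_SECONDS` threshold and the rule that a long click without a follow-up refinement counts as "satisfied" are all hypothetical assumptions, chosen only to show how clicks, dwell time and post-click behavior could be folded into one per-query, per-URL score.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical threshold: a click is "satisfied" if the user stays at least
# this long and does not reformulate the query afterwards.
LONG_CLICK_SECONDS = 30


@dataclass
class ClickEvent:
    """One result click (hypothetical structure, not taken from the leak)."""
    query: str
    url: str
    dwell_seconds: float  # time on page before returning to the results
    refined_after: bool   # did the user refine the query afterwards?


def click_scores(events):
    """Return {(query, url): share of satisfied clicks}, a value in [0, 1]."""
    satisfied = defaultdict(int)
    total = defaultdict(int)
    for e in events:
        key = (e.query, e.url)
        total[key] += 1
        if e.dwell_seconds >= LONG_CLICK_SECONDS and not e.refined_after:
            satisfied[key] += 1
    return {key: satisfied[key] / total[key] for key in total}


events = [
    ClickEvent("best laptops", "a.example", 120, False),
    ClickEvent("best laptops", "a.example", 5, True),
    ClickEvent("best laptops", "b.example", 3, True),
]
scores = click_scores(events)
# scores[("best laptops", "a.example")] is 0.5; b.example scores 0.0
```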

According to testimony from Google search executive Pandu Nayak, Glue extends NavBoost’s capabilities to all other elements on the search results page, such as videos, images, and other media. It integrates and evaluates these assets to provide a more holistic ranking assessment.

Practical Application of NavBoost

A practical example of how NavBoost functions can be seen in entity-based search behavior. Suppose users search for “Rand Fishkin,” fail to find the SparkToro website, then refine their query to “SparkToro” and click the official site. NavBoost may use this behavioral data to improve SparkToro’s ranking for the original query “Rand Fishkin.”
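The SparkToro example amounts to crediting a click on a refined query back to the query the user started with. The sketch below assumes a hypothetical session format (an ordered list of `(query, clicked_url)` pairs) and a simple counting rule; the leak does not describe NavBoost’s actual bookkeeping.

```python
from collections import Counter


def credit_refinements(sessions):
    """Credit clicks made after a refinement back to the session's first query.

    Each session is an ordered list of (query, clicked_url or None) pairs.
    Returns a Counter keyed by (original_query, url).
    """
    credit = Counter()
    for session in sessions:
        if not session:
            continue
        original_query = session[0][0]
        for query, url in session:
            # Only clicks on a refined query are credited to the original one.
            if url is not None and query != original_query:
                credit[(original_query, url)] += 1
    return credit


sessions = [
    [("Rand Fishkin", None), ("SparkToro", "https://sparktoro.com/")],
    [("Rand Fishkin", None), ("SparkToro", "https://sparktoro.com/")],
    [("Rand Fishkin", "https://example.com/profile")],
]
credit = credit_refinements(sessions)
# credit[("Rand Fishkin", "https://sparktoro.com/")] == 2
```

A click made directly on the original query (the third session) is not counted here, because it needs no re-attribution.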

NavBoost also segments click data by user location (country and state/province) and by device type (mobile or desktop), which enables more locally relevant results. For instance, the same query may return different results depending on the user’s region or device.
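Segmenting by location and device simply means keeping click counts under a wider key. In this hypothetical sketch, the dictionary field names and the "most clicked URL wins" rule are illustrative assumptions, not attributes from the leak.

```python
from collections import Counter


def segment_clicks(clicks):
    """Count clicks per (query, country, device, url) segment."""
    return Counter((c["query"], c["country"], c["device"], c["url"]) for c in clicks)


def top_url(counts, query, country, device):
    """Most-clicked URL for one query in one location/device segment, or None."""
    candidates = {
        url: n
        for (q, ctry, dev, url), n in counts.items()
        if (q, ctry, dev) == (query, country, device)
    }
    return max(candidates, key=candidates.get) if candidates else None


clicks = [
    {"query": "pizza", "country": "US", "device": "mobile", "url": "a.example"},
    {"query": "pizza", "country": "US", "device": "mobile", "url": "a.example"},
    {"query": "pizza", "country": "DE", "device": "desktop", "url": "b.example"},
]
counts = segment_clicks(clicks)
print(top_url(counts, "pizza", "US", "mobile"))   # a.example
print(top_url(counts, "pizza", "DE", "desktop"))  # b.example
```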

Together, NavBoost and Glue act as powerful behavioral modeling systems that help Google refine search quality and deliver more relevant, user-focused results worldwide.

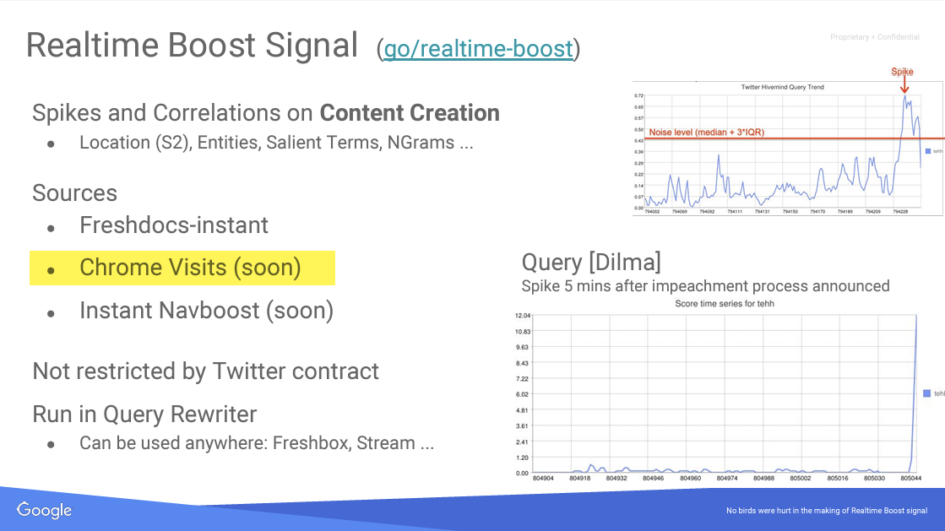

Chrome Data: How Clickstream Information Is Used in Google’s Ranking Algorithms

One of the most striking revelations in the leak is confirmation that Google actively uses user clickstream data collected via the Chrome browser to enhance its search algorithms. This behavioral data influences multiple aspects of ranking and relevance evaluation.

According to the source of the leak, one of the motivations for developing Chrome was to give Google access to the complete clickstream of a substantial share of global internet users. This dataset includes detailed records of visited URLs, giving Google deep insight into user preferences and navigation patterns.

How Chrome Click Data Is Applied in Search Algorithms

According to the leaked materials, Google employs several types of Chrome-based metrics for analyzing both individual pages and entire domains. One example is the “top-URL” function, which evaluates page popularity based on aggregated Chrome click data.

A practical application of this can be seen in Sitelink generation — the additional internal links displayed below main search results. The documentation suggests that Chrome click data is used to determine which URLs are most frequently visited, helping Google select the most relevant pages to feature as Sitelinks.
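The sitelink idea described above can be approximated by counting visits to a domain’s internal pages and keeping the most popular ones. This is an illustrative sketch under assumed inputs (a flat list of visited URLs and a default `limit` of four links); `pick_sitelinks` is a hypothetical name, and Google’s real selection very likely uses more signals.

```python
from collections import Counter
from urllib.parse import urlsplit


def pick_sitelinks(visited_urls, domain, limit=4):
    """Return up to `limit` internal URLs of `domain`, most visited first.

    The homepage itself is skipped because it is already the main result.
    """
    counts = Counter()
    for url in visited_urls:
        parts = urlsplit(url)
        if parts.hostname == domain and parts.path not in ("", "/"):
            counts[url] += 1
    return [url for url, _ in counts.most_common(limit)]


visits = (
    ["https://example.com/pricing"] * 5
    + ["https://example.com/blog"] * 3
    + ["https://example.com/"] * 10
    + ["https://example.com/about"]
    + ["https://other.example/x"] * 7
)
print(pick_sitelinks(visits, "example.com", limit=2))
# ['https://example.com/pricing', 'https://example.com/blog']
```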

The Sandbox for New Websites

The term “sandbox” has long been used within the SEO community to describe a probationary period during which a new website struggles to achieve high search rankings despite strong content and optimization.

The leaked documents confirm that Google does implement such a mechanism. During an initial period after launch, new websites undergo heightened scrutiny and may be kept out of prominent positions while Google assesses their reliability and quality. This supports the long-held suspicion that a sandbox exists to protect the integrity of search results.
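One way to picture a sandbox is as a dampening multiplier that fades out as a site ages. The 90-day `SANDBOX_DAYS` window and the 0.5 starting weight below are invented for illustration; the leaked material discussed here confirms the mechanism but does not give its parameters.

```python
from datetime import date

# Hypothetical length of the probation period; not a value from the leak.
SANDBOX_DAYS = 90


def sandbox_factor(first_seen: date, today: date) -> float:
    """Return a multiplier in [0.5, 1.0] that rises linearly over SANDBOX_DAYS.

    A brand-new site gets half weight; after the window it gets full weight.
    """
    age_days = (today - first_seen).days
    if age_days >= SANDBOX_DAYS:
        return 1.0
    return 0.5 + 0.5 * max(age_days, 0) / SANDBOX_DAYS


print(sandbox_factor(date(2024, 1, 1), date(2024, 2, 15)))  # 0.75 (45 days old)
```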

Separate Ranking for Subdomains

Another major revelation concerns the independent ranking of subdomains. Although Google has repeatedly stated that subdomains are treated as part of the main domain, the leaked documentation suggests otherwise.

Subdomains can be evaluated and ranked independently from the primary domain. This enables Google to more accurately serve relevant content. For example, subdomains dedicated to specific topics or regions may achieve their own rankings separate from the main site.

The confirmation of both the sandbox mechanism for new websites and the separate ranking of subdomains provides a clearer understanding of how Google maintains search quality and ensures the relevance of its results. These findings allow SEO professionals to develop more effective strategies and improve website visibility in search results.

Domain Age

Previously, Google representatives claimed that domain age is not a significant ranking factor. However, the leaked documents reveal that Google does in fact consider this information when assessing a website’s quality and relevance.

Google stores domain registration data, including the registration date, from which the domain’s age at the time of a query can be determined. This data helps the search engine evaluate a website’s stability and trustworthiness, since older domains are generally perceived as more authoritative and reliable.
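Deriving the age at query time from a stored registration date is plain date arithmetic. The function name and the choice of whole years as the unit are assumptions for illustration; how much weight Google gives the result is not known.

```python
from datetime import date


def domain_age_years(registered_on: date, query_date: date) -> int:
    """Whole years between a domain's registration date and the query date."""
    years = query_date.year - registered_on.year
    # Subtract one if this year's registration anniversary has not arrived yet.
    if (query_date.month, query_date.day) < (registered_on.month, registered_on.day):
        years -= 1
    return years


print(domain_age_years(date(2010, 6, 15), date(2024, 6, 14)))  # 13
```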

Domain Authority

Google has repeatedly stated that it does not use a “domain authority” metric as part of its ranking algorithm. Representatives such as Gary Illyes and John Mueller have publicly denied the existence of a metric similar to Moz’s “Domain Authority” or those used by other SEO tools.

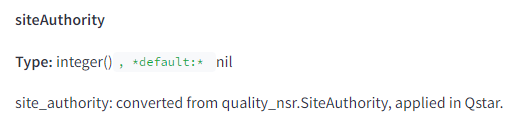

However, the leaked internal documentation suggests that Google uses a comparable metric known as “siteAuthority.” The documents reference an attribute named quality_nsr.SiteAuthority, which is applied within Google’s Qstar ranking system.

While the exact calculation and application of this metric remain unclear, its existence indicates that a domain-level authority factor is indeed part of Google’s ranking framework.

Whitelisting for Specific Query Categories

The leak also confirmed the existence of whitelists for certain types of content, such as COVID-19, democratic elections, and travel-related information. These whitelists ensure that users receive accurate, verified, and trustworthy information during critical global events or crises.

- COVID-19: During the pandemic, Google implemented whitelists for websites providing credible and medically verified information about the virus.

- Elections: During election periods, Google prioritized websites offering reliable election data to minimize misinformation and promote factual reporting. Sites included on these whitelists received a ranking advantage, allowing them to appear at the top of search results.

- Travel: Google also applies whitelisting to travel content. Websites offering high-quality travel guidance, reviews, and destination recommendations can be included in these lists, enhancing their visibility.
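Conceptually, a whitelist acts as a category-specific boost applied on top of ordinary scores. In this sketch the `WHITELISTS` mapping, the placeholder `.example` domains and the fixed `boost=2.0` multiplier are hypothetical; the leak confirms that such lists exist, not how they are applied.

```python
# Hypothetical whitelists keyed by query category; domains are placeholders.
WHITELISTS = {
    "covid": {"health-authority.example"},
    "elections": {"election-office.example"},
    "travel": {"travel-guide.example"},
}


def rerank_with_whitelist(results, category, boost=2.0):
    """Multiply the score of whitelisted domains for this query category.

    `results` is a list of (domain, score) pairs; returns them re-sorted.
    """
    allowed = WHITELISTS.get(category, set())
    adjusted = [
        (domain, score * boost if domain in allowed else score)
        for domain, score in results
    ]
    return sorted(adjusted, key=lambda pair: pair[1], reverse=True)


results = [("blog.example", 0.9), ("health-authority.example", 0.6)]
print(rerank_with_whitelist(results, "covid")[0][0])   # health-authority.example
print(rerank_with_whitelist(results, "sports")[0][0])  # blog.example
```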

Twiddlers

Twiddlers are re-ranking functions applied after Google’s primary ranking algorithm (Ascorer) has produced initial results. They work much like filters or hooks in WordPress, modifying the output just before it is presented to users. Twiddlers can adjust a document’s relevance score or change its position in the search results. These functions play a critical role in several Google systems, including Panda and NavBoost.

Core functions of Twiddlers include:

- Re-categorization: Limiting the number of results of a specific type to ensure diversity on the search results page.

- Filtering: Promoting or demoting documents based on secondary signals such as content freshness or user experience.

- Experimentation: Running live ranking experiments that test algorithmic changes in real time.
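A chain of Twiddlers maps naturally onto a list of functions applied in order to an initial ranking. This Python sketch is an analogy, not Google’s implementation: the `(url, category, score)` tuples, the two example twiddlers and the final re-sort are assumptions. It mirrors two of the roles listed above, promoting documents by a secondary signal (freshness) and capping one result type for diversity.

```python
from typing import Callable, List, Tuple

# A result is (url, category, score); a twiddler maps a result list to a new one.
Result = Tuple[str, str, float]
Twiddler = Callable[[List[Result]], List[Result]]


def freshness_twiddler(fresh_urls: set, boost: float = 1.2) -> Twiddler:
    """Promote documents flagged as fresh by multiplying their score."""
    def twiddle(results):
        return [(u, c, s * boost if u in fresh_urls else s) for u, c, s in results]
    return twiddle


def category_limit_twiddler(category: str, max_count: int) -> Twiddler:
    """Keep at most `max_count` results of one category, for diversity."""
    def twiddle(results):
        kept, seen = [], 0
        for u, c, s in sorted(results, key=lambda r: r[2], reverse=True):
            if c == category:
                seen += 1
                if seen > max_count:
                    continue  # drop the lower-scoring extras of this type
            kept.append((u, c, s))
        return kept
    return twiddle


def apply_twiddlers(results, twiddlers):
    """Run each twiddler in order on the initial ranking, then sort by score."""
    for twiddle in twiddlers:
        results = twiddle(results)
    return sorted(results, key=lambda r: r[2], reverse=True)


initial = [("v1", "video", 0.9), ("v2", "video", 0.8),
           ("v3", "video", 0.7), ("a1", "article", 0.5)]
pipeline = [freshness_twiddler({"a1"}, boost=2.0), category_limit_twiddler("video", 2)]
final = apply_twiddlers(initial, pipeline)
print([url for url, _, _ in final])  # ['a1', 'v1', 'v2']
```

Because each twiddler takes and returns a plain list, new adjustments can be added or removed without touching the initial scoring step, which is the property the WordPress-hook comparison points at.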

An anecdote from a former Google engineer illustrates their importance — when Twiddlers were accidentally disabled, it reportedly caused major issues with YouTube’s internal search system.

Anchor Mismatch

Anchor Mismatch is a mechanism used by Google to reduce the weight of backlinks when the anchor text does not match the content of the target page. This helps the search engine better evaluate link quality and prevents manipulation through irrelevant or spammy anchor text.

How Anchor Mismatch Works:

- Anchor text analysis: Google analyzes the clickable text of each link to determine its relevance to the target page. The anchor text should accurately describe the content users will find.

- Content comparison: Algorithms compare the anchor text to the target page’s primary keywords and topical focus. If there is a mismatch, the link’s value may be reduced.

- Mismatch threshold: When the inconsistency between anchor text and page content exceeds a certain threshold, Google may treat the link as irrelevant or spammy, lowering its contribution to overall ranking signals.
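The three steps above can be sketched as a simple overlap test between anchor words and the target page’s keywords. The tokenizer, the 0.2 `threshold` and the 1.0/0.1 weights are hypothetical values chosen for illustration; the leak does not say how Google measures the mismatch.

```python
import re


def tokens(text):
    """Lower-case alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def anchor_weight(anchor_text, page_keywords, threshold=0.2):
    """Return the weight a link passes: 1.0 if relevant, 0.1 if mismatched.

    Relevance is the share of anchor tokens that also appear among the
    target page's keywords; below `threshold` the link counts as a mismatch.
    """
    anchor = tokens(anchor_text)
    if not anchor:
        return 0.1  # nothing to compare (e.g. empty anchor): treat as mismatch
    overlap = len(anchor & tokens(" ".join(page_keywords))) / len(anchor)
    return 1.0 if overlap >= threshold else 0.1


print(anchor_weight("cheap running shoes", ["running shoes", "trail", "sneakers"]))  # 1.0
print(anchor_weight("best casino bonus", ["running shoes", "trail"]))               # 0.1
```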

Quality Raters and the EWOK System

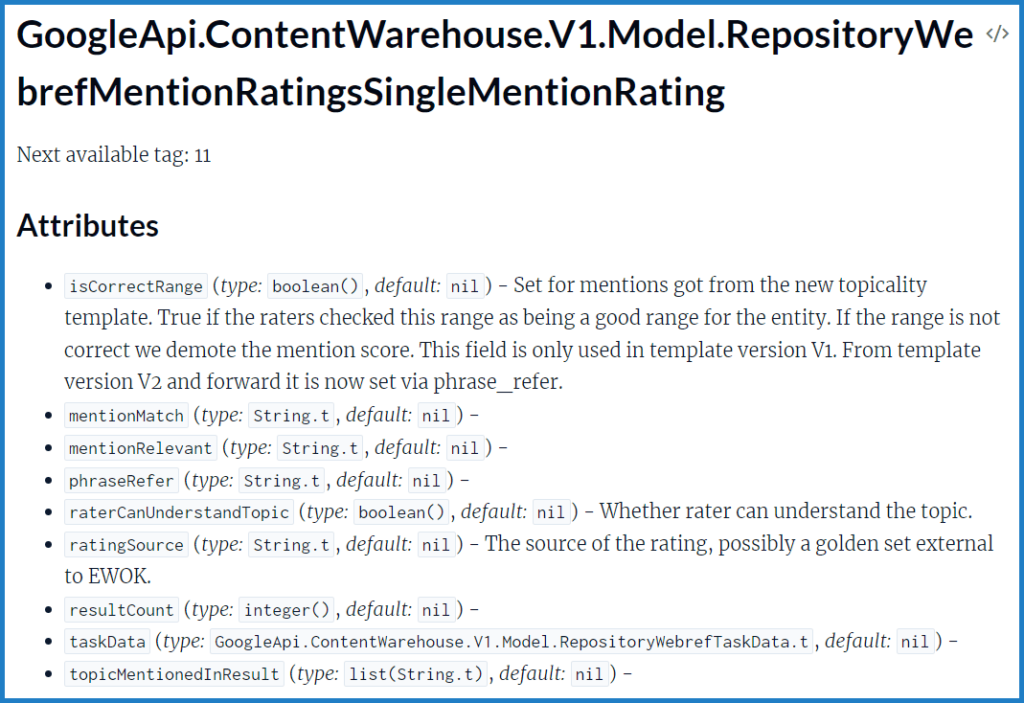

Another significant revelation involves Google’s quality rating system, known internally as EWOK. Data collected from Quality Raters plays an important role in evaluating websites and refining search results.

EWOK is an internal platform used to recruit and manage human raters who manually assess the quality and relevance of web pages. Raters work according to detailed guidelines developed by Google to ensure consistent and objective evaluations.

Quality Rater feedback influences rankings through several mechanisms:

- Content relevance: Raters assess how well a page satisfies user intent. Sites that consistently receive high ratings can achieve better visibility.

- Trust and credibility: Pages that provide reliable, fact-checked information (especially in sensitive areas such as health and finance) can be promoted in rankings.

- User experience: Factors such as navigation, page load time, and intrusive advertising are evaluated. Sites offering a better user experience may receive higher rankings.

- Feedback loop: Raters’ assessments are used to train and fine-tune Google’s algorithms, improving the relevance of future search results.

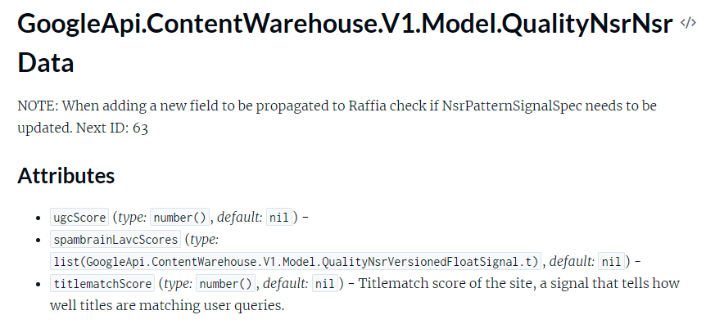

Title Matching Evaluation

The leaked documentation references a metric called “titlematchScore,” used to assess how closely a page’s title aligns with the user’s search query. This confirms long-standing assumptions among SEO specialists that well-optimized titles significantly influence search rankings.

How It Works:

- Title relevance: When a user submits a query, Google’s algorithms compare each page title with the search intent and keywords. The closer the semantic match, the higher the titlematchScore.

- Keyword positioning: Keywords placed near the beginning of the title carry greater weight, emphasizing the importance of front-loading primary terms.

- Accuracy and uniqueness: Titles that precisely and uniquely describe page content achieve higher scores, while generic or repetitive titles can negatively affect rankings.
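A rough analogue of a titlematchScore-style metric can combine query-term coverage with a position discount. This sketch uses plain word overlap rather than semantic matching, and the `1 / (1 + 0.1 * i)` position weight is an invented assumption that only illustrates the front-loading effect described above.

```python
import re


def title_match_score(title, query):
    """Score in [0, 1]: share of query terms in the title, weighted by position.

    A query term first found at title word index i contributes
    1 / (1 + 0.1 * i), so terms near the start of the title count more.
    """
    title_words = re.findall(r"[a-z0-9]+", title.lower())
    query_words = re.findall(r"[a-z0-9]+", query.lower())
    if not query_words:
        return 0.0
    total = 0.0
    for term in query_words:
        if term in title_words:
            i = title_words.index(term)
            total += 1 / (1 + 0.1 * i)
    return total / len(query_words)


front = title_match_score("Running Shoes: 2024 Buyer's Guide", "running shoes")
late = title_match_score("Our Complete 2024 Guide to Running Shoes", "running shoes")
print(front > late)  # True
```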

Exact Match Domains (EMD)

For many years, it was believed that domains containing exact match keywords ranked higher in search results. However, Google employs specific algorithms to reduce the ranking influence of Exact Match Domains that provide low-quality content.

If a domain name exactly matches a popular search query but the associated website lacks value, it may be demoted in rankings. For instance, domains like “best-cheap-laptops.com” or “buy-gold-jewelry.net” could be penalized if their content fails to meet Google’s quality standards. This policy aims to counteract manipulative practices that exploit keyword-based domain names.
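The policy described above boils down to two checks: does the domain name spell out the query, and is the site’s quality below some bar? In this hypothetical sketch, the naive TLD stripping (it only drops the last label, so `co.uk` domains would be mishandled), the 0.5 quality cutoff and the 50% penalty are all assumptions.

```python
import re


def is_exact_match_domain(domain, query):
    """True if the domain's name, minus the last label, spells out the query."""
    name = domain.lower().rsplit(".", 1)[0]
    domain_terms = re.findall(r"[a-z0-9]+", name)
    query_terms = re.findall(r"[a-z0-9]+", query.lower())
    return bool(query_terms) and (
        domain_terms == query_terms or "".join(domain_terms) == "".join(query_terms)
    )


def emd_adjusted_score(score, domain, query, quality, min_quality=0.5, penalty=0.5):
    """Scale down the score of an exact-match domain whose quality is below par."""
    if is_exact_match_domain(domain, query) and quality < min_quality:
        return score * penalty
    return score


print(emd_adjusted_score(0.8, "best-cheap-laptops.com", "best cheap laptops", quality=0.2))  # 0.4
```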

Additional Key Insights

- Font size consideration: Google analyzes average font size for terms in documents and anchor text as part of its assessment.

- Domain registration data: Registration details are stored and may be used to determine sandbox conditions for new or recently modified domains.

- Homepage influence: The homepagePagerankNs attribute affects the ranking potential of internal pages.

- Short-form content ranking: Brief content is evaluated for originality before being ranked.

- Content date validation: Google cross-checks publication dates in visible text, metadata, and URLs to confirm content freshness and accuracy.
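The date cross-check in the last item can be sketched as extracting a candidate date from each source and testing whether they agree. The patterns below assume ISO dates (YYYY-MM-DD) in the text and metadata and a `/YYYY/MM/DD/` URL layout; the two-day tolerance and the "at least two sources" rule are hypothetical.

```python
import re
from datetime import date


def extract_dates(visible_text, meta_date, url):
    """Collect candidate publication dates from text, metadata and URL.

    Assumes ISO dates in the text and metadata and a /YYYY/MM/DD/ pattern
    in the URL; real pages use many more formats.
    """
    found = {}
    m = re.search(r"\b(\d{4})-(\d{2})-(\d{2})\b", visible_text)
    if m:
        found["text"] = date(*map(int, m.groups()))
    if meta_date:
        found["meta"] = date.fromisoformat(meta_date)
    m = re.search(r"/(\d{4})/(\d{2})/(\d{2})/", url)
    if m:
        found["url"] = date(*map(int, m.groups()))
    return found


def dates_agree(found, tolerance_days=2):
    """True if at least two sources exist and all lie within the tolerance."""
    if len(found) < 2:
        return False
    values = sorted(found.values())
    return (values[-1] - values[0]).days <= tolerance_days


ok = extract_dates("Published 2024-05-28 by staff", "2024-05-28",
                   "https://example.com/2024/05/28/leak/")
stale = extract_dates("Updated 2024-05-28", "2021-01-01", "https://example.com/post")
print(dates_agree(ok), dates_agree(stale))  # True False
```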

For more details, see the full leaked documentation.